Dynamic Data Analysis – v5.12.01 - © KAPPA 1988-2017

Chapter 3 – Pressure Transient Analysis (PTA)

Despite the spread of ever more complex analytical models, and of the various pseudo-pressures developed to turn nonlinear problems into pseudo-linear problems accessible to these models, there came a point where analytical models could no longer handle the complexity of some geometries or the strong nonlinearity of some diffusion processes. The 1990s saw the development of the first numerical models dedicated to well testing, though their use only became widespread in the early 2000s.

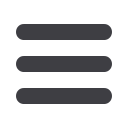

Fig. 3.A.14 – History match

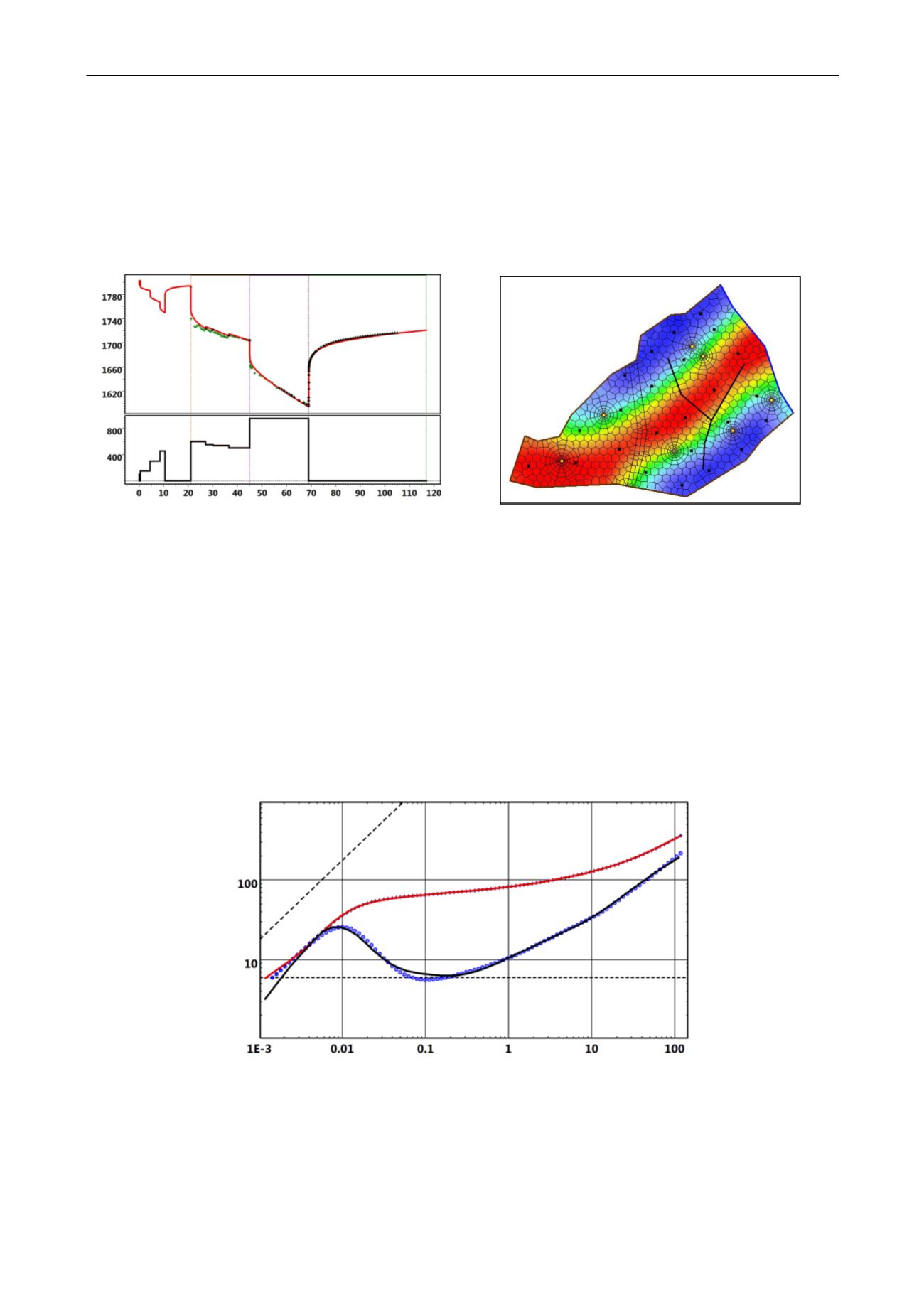

Fig. 3.A.15 – Numerical models

One would expect most developments to come to be technology-related, with more powerful processing, better graphics and larger amounts of available data. We will certainly also see, in the years to come, expert systems able to approach the capability of human engineers, and more convincingly than the work done on the subject in the 1990s.

However, the surprise is that we may also see fundamental improvements in methodology. The ‘surprise’ of the past decade has been the successful publication of a deconvolution method which, at last (though with caveats), can be used to combine several build-ups into a single virtual, long and clean production response. Other surprises may be coming…

Fig. 3.A.16 – Deconvolution

In the next two sections we will make a clear distinction between traditional tools (the ‘old stuff’) and modern tools (the ‘right stuff’). Traditional tools had their uses in the past and are still used today, but they have become largely redundant for analysis performed with today’s software. Modern tools are at the core of today’s (2016) methodology, and they are, of course, on the default processing path.